This paper presents a method based on a forward spotting scheme that performs gesture segmentation and recognition using sliding windows and moving-average HMMs. The sliding window computes the observation probability of a gesture or non-gesture from a number of consecutive observations inside the window, and thus captures the dynamics of the gesture without any abrupt start or end. Using the forward procedure, the averaged conditional probability is computed for each observation window, and the HMM is trained on these probability measures and transitions. After the required number of gesture models has been trained, a non-gesture model is also trained to account for everything that is not classified as a gesture. This model is trained with the same approach as the gesture models, but with non-gesture data as input.
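To make the windowed forward computation concrete, here is a minimal sketch in Python with NumPy. It assumes discrete HMMs over quantized posture labels, with parameters `(pi, A, B)` already trained, and it interprets the "averaged conditional probability" as the per-observation log-likelihood of each window. The function names and the window length are hypothetical, and this is not the paper's exact formulation.

```python
import numpy as np


def forward_log_likelihood(pi, A, B, obs):
    """Scaled forward procedure: log P(obs | model) for a discrete HMM.

    pi:  (N,) initial state probabilities
    A:   (N, N) transitions, A[i, j] = P(state j at t+1 | state i at t)
    B:   (N, M) emissions, B[i, k] = P(symbol k | state i)
    obs: sequence of symbol indices (assumed: quantized posture labels)
    """
    alpha = pi * B[:, obs[0]]
    log_like = 0.0
    for t in range(len(obs)):
        if t > 0:
            # alpha_t(j) = sum_i alpha_{t-1}(i) * A[i, j] * B[j, o_t]
            alpha = (alpha @ A) * B[:, obs[t]]
        # Rescale each step to avoid underflow; the log of the scale
        # factors sums to log P(obs | model).
        scale = alpha.sum()
        log_like += np.log(scale)
        alpha = alpha / scale
    return log_like


def windowed_scores(model, obs, window):
    """Per-observation average log-likelihood of every sliding window.

    Entry k scores obs[k : k + window]. Dividing by the window length is
    an assumed stand-in for the paper's averaging step.
    """
    pi, A, B = model
    scores = []
    for end in range(window, len(obs) + 1):
        segment = obs[end - window:end]
        scores.append(forward_log_likelihood(pi, A, B, segment) / window)
    return np.array(scores)
```

Running `windowed_scores` once per gesture model and once for the non-gesture model on the same posture stream yields aligned score sequences that can be compared window by window.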

To segment a gesture, the author argues that since a gesture consists of a number of postures, it can be segmented by dividing it into postures and then examining the cumulative probability over the window. According to the author, for a sequence of gestural postures the cumulative probability under the gesture model will be higher than the non-gesture probability, whereas for a sequence of non-gestural postures it will be lower than the non-gesture probability. Because the sliding windows are small, we can locate the state at which the probability drops below the non-gesture probability and use that point for segmentation.
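The segmentation rule can be sketched roughly as follows, under my own reading of it: a window counts as gestural when the best-scoring gesture model beats the non-gesture model, a segment starts at the first such window, and it ends at the first window where that no longer holds. The inputs are per-window scores (for example, log-likelihoods from a windowed forward pass), and the function names are hypothetical. Assigning the segment's label by the highest summed score is an assumption, not something the paper specifies.

```python
def _best_label(gesture_scores, start, end):
    """Gesture whose summed window score over [start, end) is highest
    (assumed labeling rule)."""
    return max(gesture_scores, key=lambda g: sum(gesture_scores[g][start:end]))


def spot_gestures(gesture_scores, nongesture_scores):
    """Return (start, end, label) segments; end is exclusive.

    gesture_scores:    dict label -> list of per-window scores
    nongesture_scores: list of per-window scores of the non-gesture model
    Higher scores mean "more likely" (e.g. log-likelihoods).
    """
    n = len(nongesture_scores)
    segments = []
    start = None
    for t in range(n):
        best = max(gesture_scores, key=lambda g: gesture_scores[g][t])
        is_gesture = gesture_scores[best][t] > nongesture_scores[t]
        if is_gesture and start is None:
            start = t  # gesture probability rose above non-gesture
        elif not is_gesture and start is not None:
            # Probability fell below non-gesture: close the segment here.
            segments.append((start, t, _best_label(gesture_scores, start, t)))
            start = None
    if start is not None:  # stream ended while still inside a gesture
        segments.append((start, n, _best_label(gesture_scores, start, n)))
    return segments
```

In this sketch the non-gesture score plays the role of a threshold that varies per window, so no fixed likelihood cutoff is needed.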

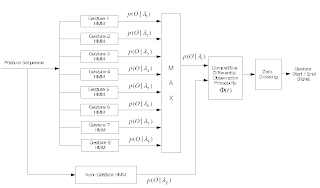

The complete system has a hierarchical structure: all gesture HMMs are grouped together in one class, and there is a separate class for the non-gesture HMM. An input posture sequence is fed to both the gesture HMM class and the non-gesture class. The complete structure is shown in the figure above.

This is similar to a standard HMM recognizer, except that a non-gesture HMM is also trained. It gives a higher probability for non-gesture input, whereas each gesture HMM gives a higher probability for its respective gesture.
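Written as a decision rule, the comparison against the non-gesture model amounts roughly to the following, where $O$ is the input posture sequence, $\lambda_1, \dots, \lambda_G$ are the gesture HMMs and $\lambda_{\text{NG}}$ is the non-gesture HMM (the notation is mine, not the paper's):

$$
g^{*} = \arg\max_{g \in \{1,\dots,G\}} P(O \mid \lambda_g), \qquad
\operatorname{label}(O) =
\begin{cases}
g^{*} & \text{if } P(O \mid \lambda_{g^{*}}) > P(O \mid \lambda_{\text{NG}}),\\
\text{non-gesture} & \text{otherwise.}
\end{cases}
$$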

Discussion:

I liked the approach of dividing gestures into postures and then using the sequence of postures over small windows to determine the segmentation point. However, I was disappointed by their simple observation sequences, as each of their gestures was far more distinct and simpler than what we are aiming at. Computational time is also questionable for this approach: it does not look real-time to me if we are looking at fine postures to compose a gesture. That said, for very distinct gestures like theirs, low-resolution images might allow close to real-time performance. I also believe the system is strongly user dependent, since non-gestural movements vary from person to person, and some non-gestural movements of one person may correspond to gestural movements of another (it is not uncommon, for instance, for people to stretch in different ways).

1 comment:

Excellent points about potential flaws of the system when exposed to different users. It does expose the weaknesses that the paper did not address well...or at all. I want to like this paper, and I did before reading the other comments. I guess we'll have to find another paper that hopefully did better. Things are looking grim.
